bienst1: Instance-to-Instance Comparison Results

| Type: | Instance |

| Submitter: | MIPLIB submission pool |

| Description: | Imported from the MIPLIB2010 submissions. |

| MIPLIB Entry |

Parent Instance (bienst1)

All other instances below were compared against this "query" instance.

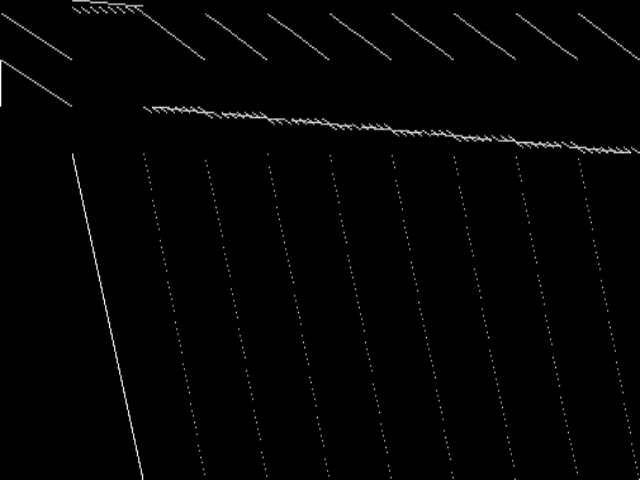

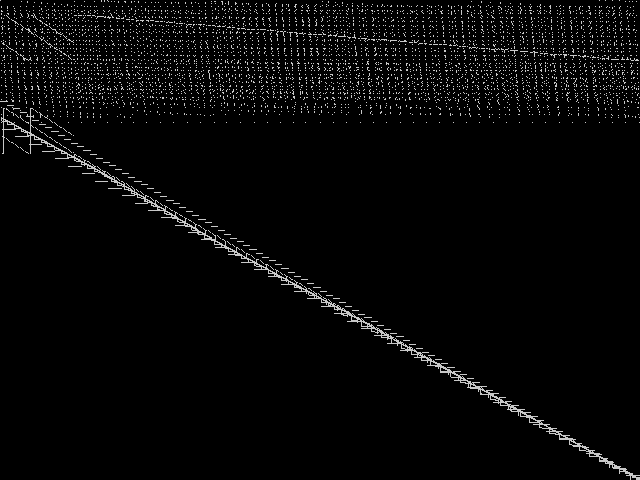

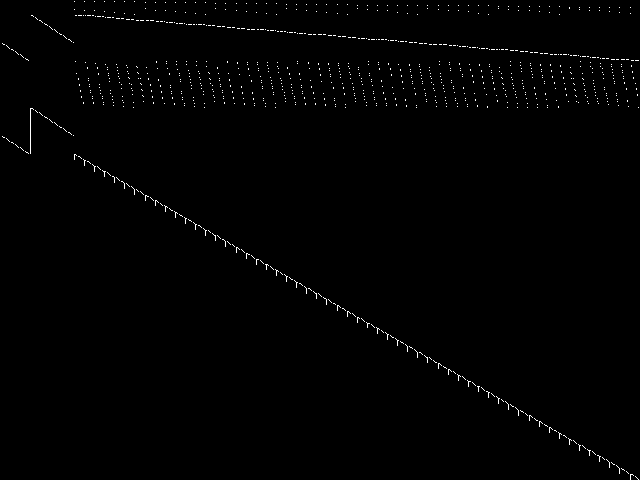

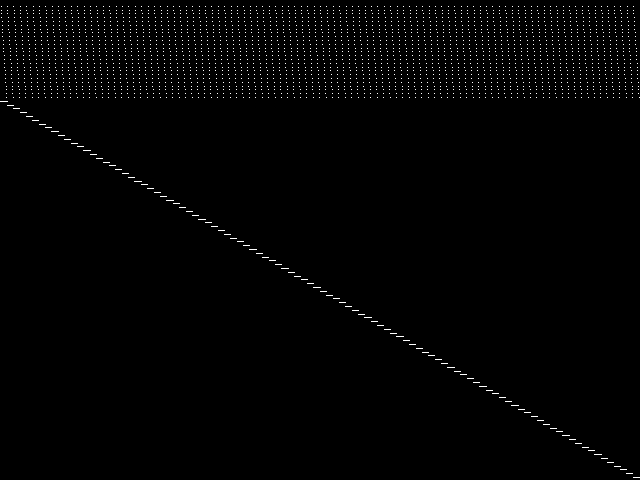

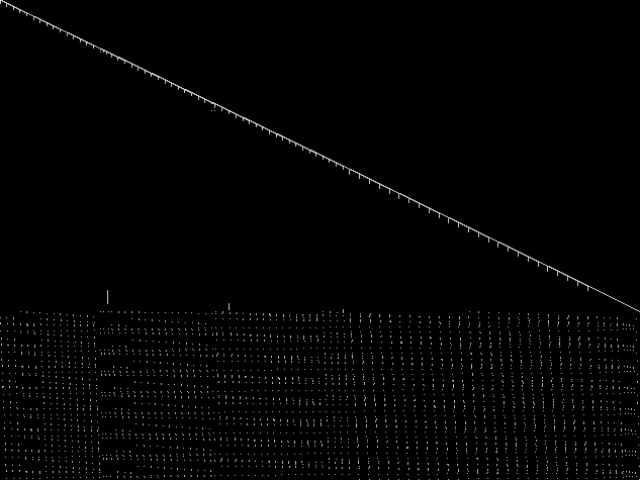

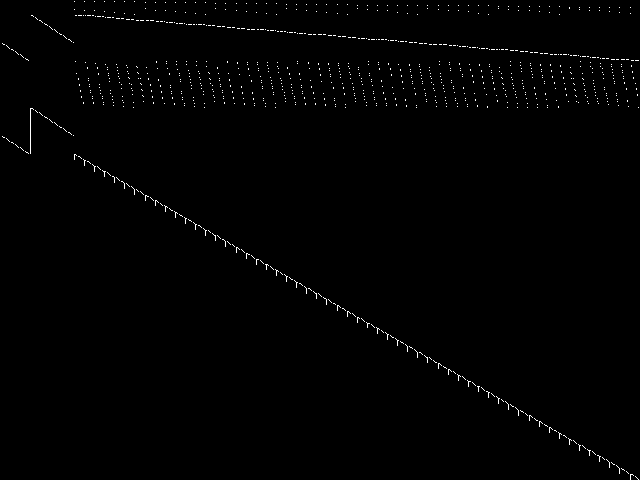

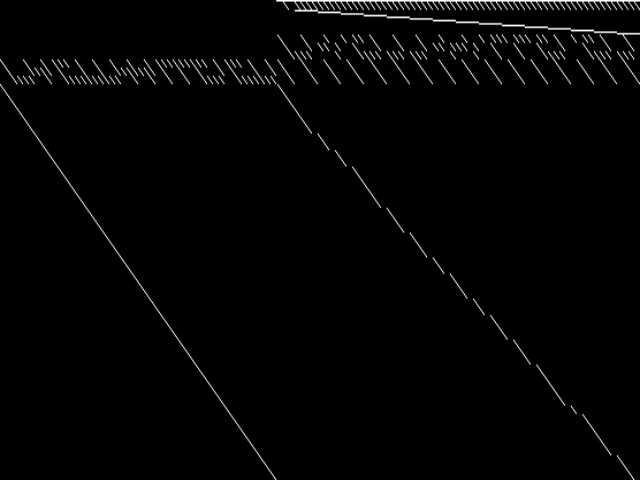

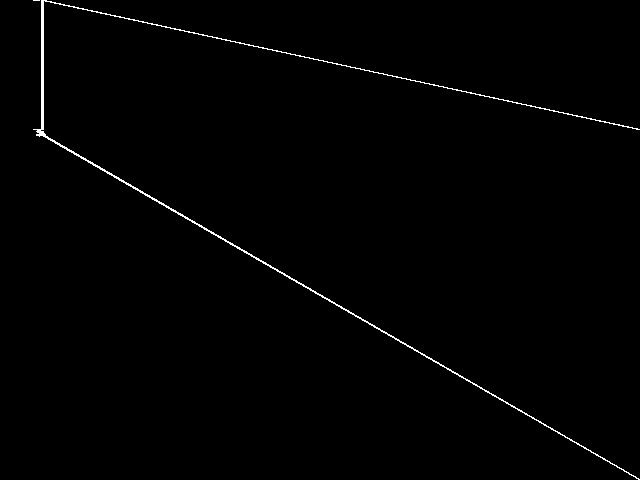

Raw

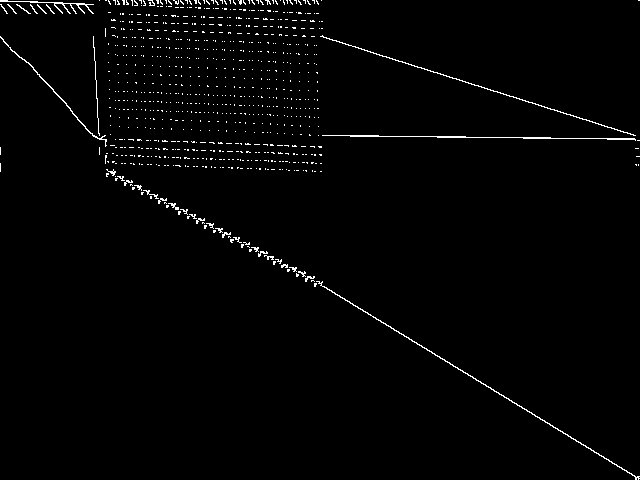

This is the CCM image before the decomposition procedure has been applied.

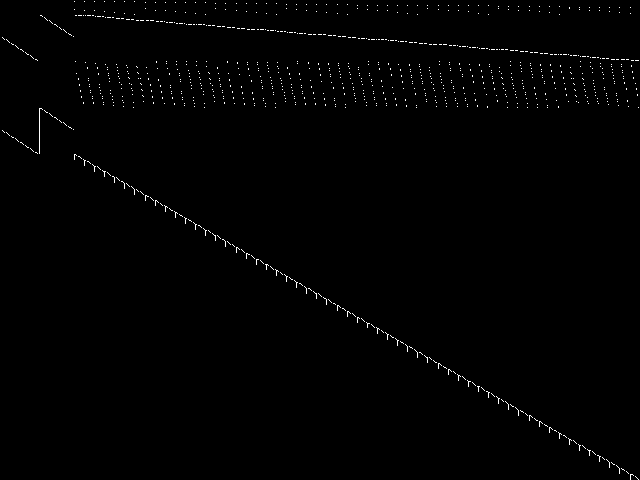

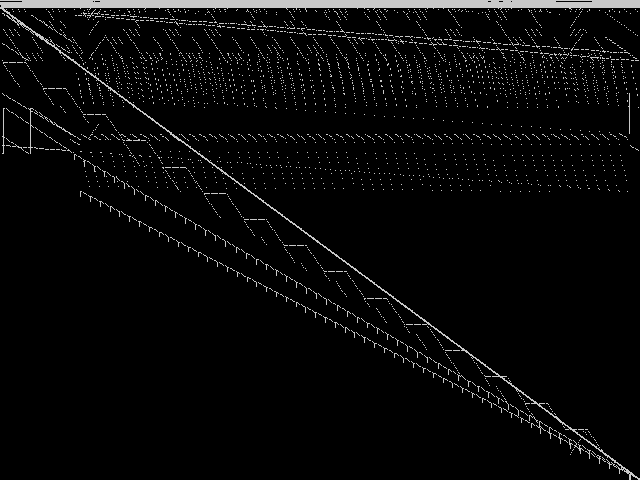

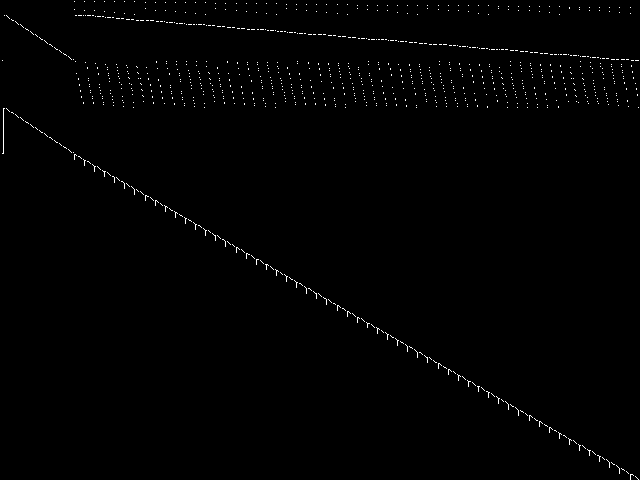

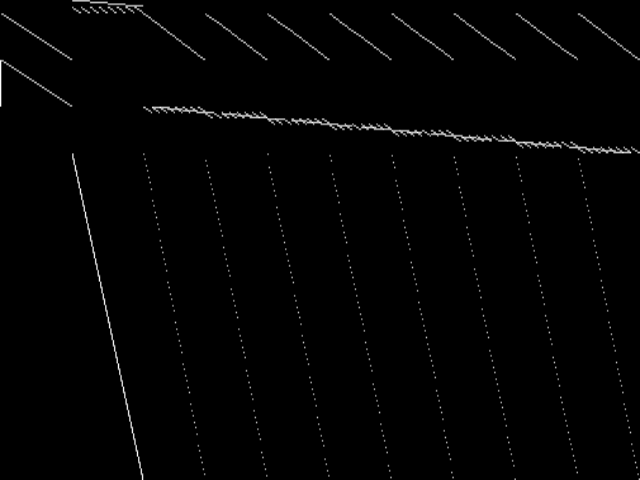

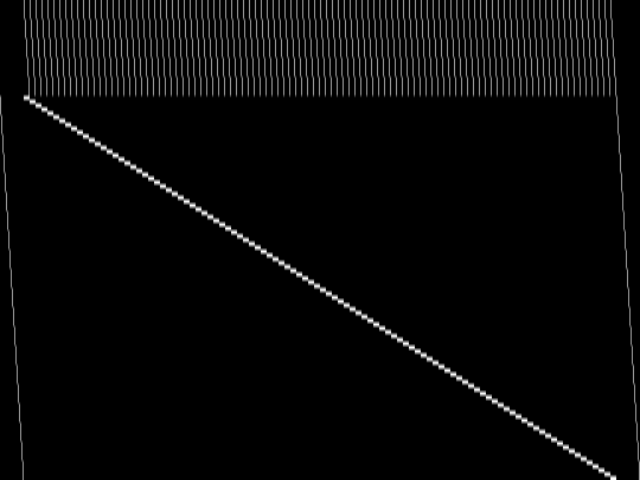

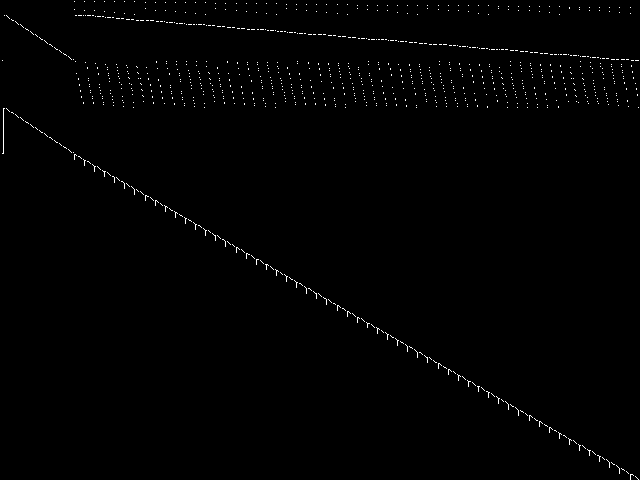

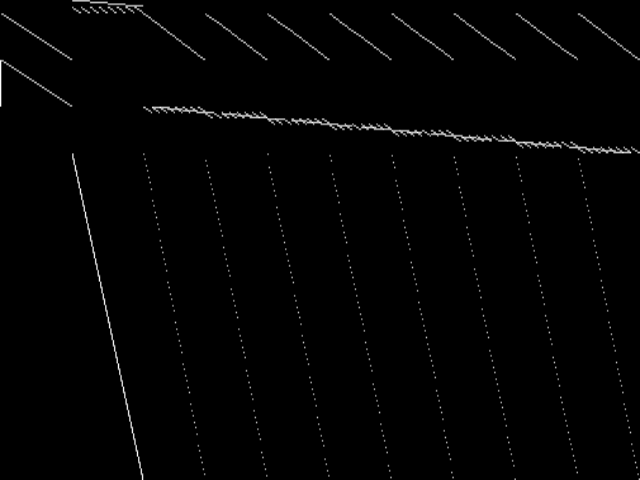

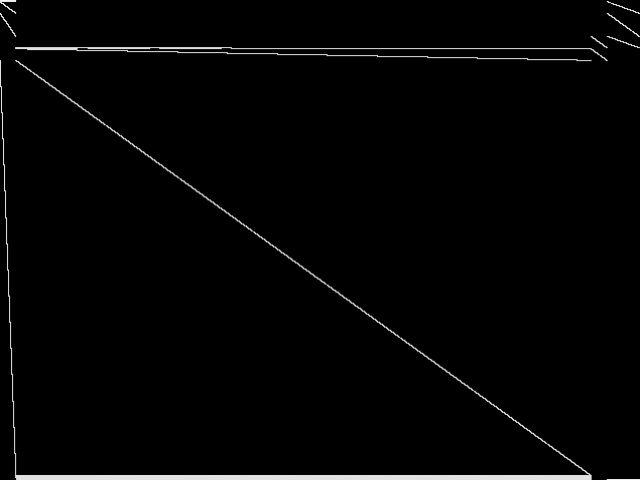

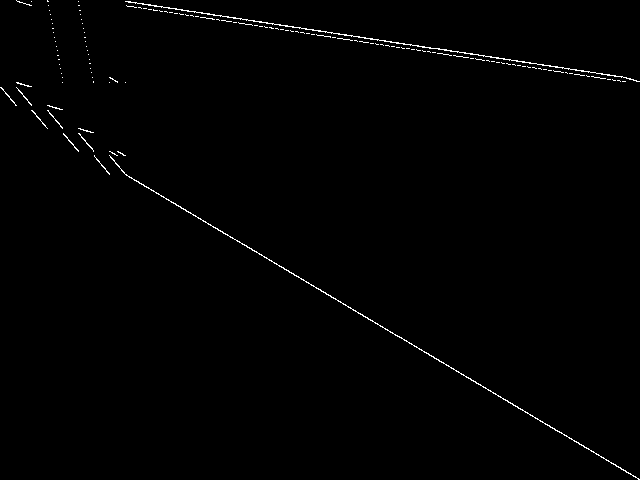

Decomposed

This is the CCM image after a decomposition procedure has been applied. This is the image used by the MIC's image-based comparisons for this query instance.

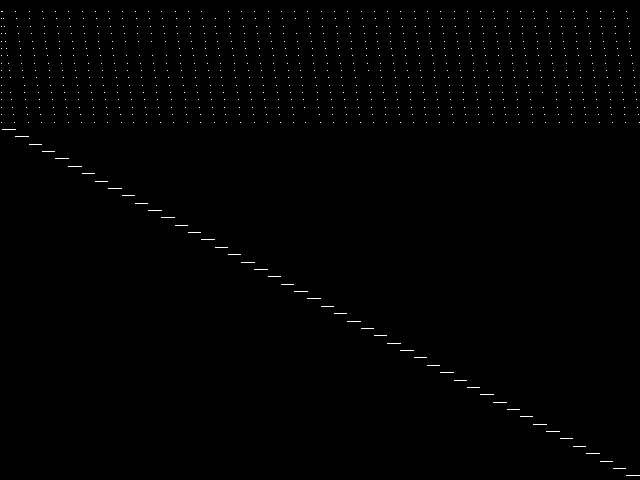

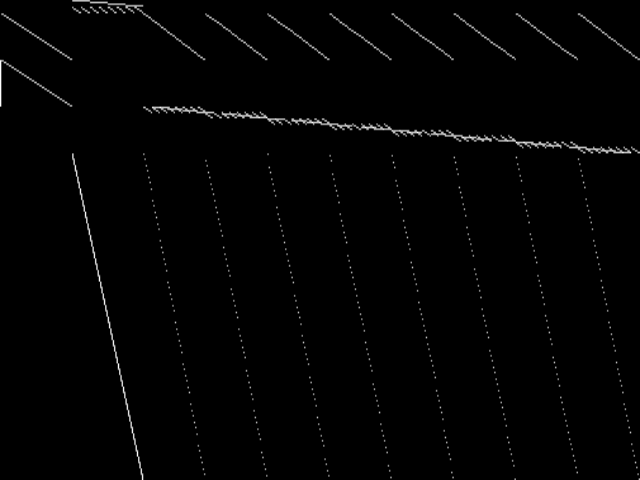

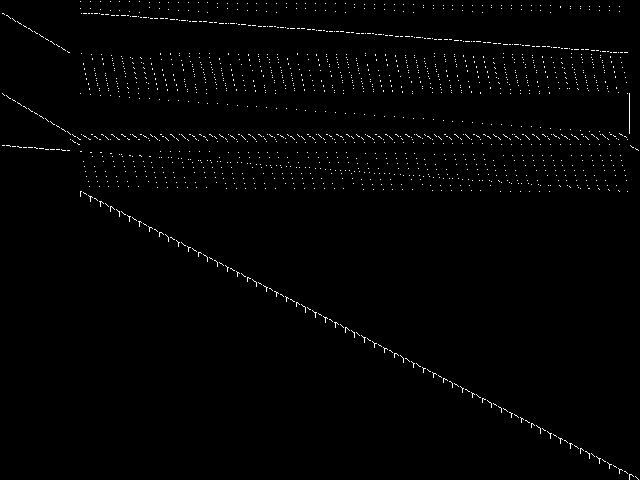

Composite of MIC Top 5

Composite of the five decomposed CCM images from the MIC Top 5.
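The page does not say how a composite image is computed. As a rough sketch only, assuming the composite is a pixelwise mean of equally sized grayscale images, the idea could look like the following. The `composite` function and the tiny 2x2 "images" are hypothetical stand-ins, not the MIC's actual procedure or data.

```python
def composite(images):
    """Pixelwise mean of equally sized grayscale images.

    Each image is a list of rows, and each row is a list of pixel values.
    Assumption: the composite is a plain average; the real method may differ.
    """
    if not images:
        raise ValueError("need at least one image")
    height, width = len(images[0]), len(images[0][0])
    for img in images:
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError("all images must have the same dimensions")
    n = len(images)
    return [
        [sum(img[r][c] for img in images) / n for c in range(width)]
        for r in range(height)
    ]


# Two made-up 2x2 images standing in for decomposed CCM images.
image_a = [[0, 255], [255, 0]]
image_b = [[255, 255], [0, 0]]
result = composite([image_a, image_b])
print(result)
```

Pixels that are dark in every input stay dark in the average, so structure shared by all five images would remain the most visible part of such a composite.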

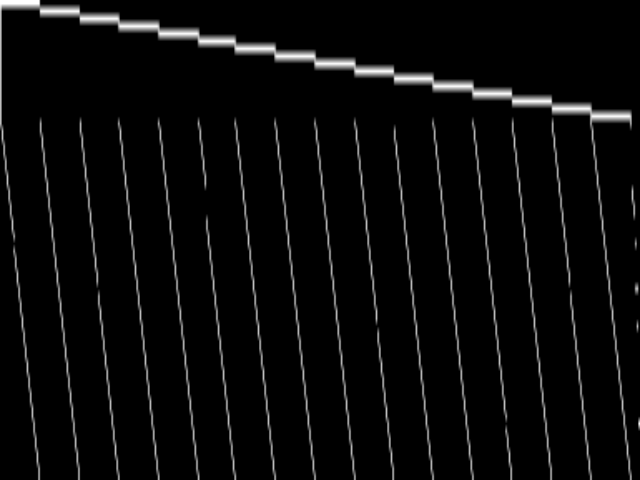

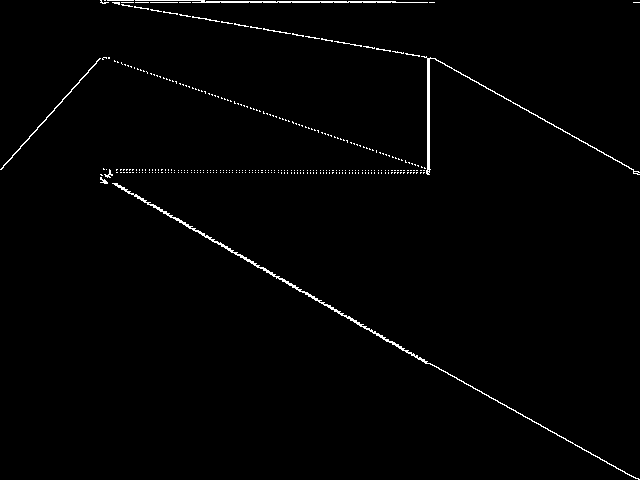

Composite of MIPLIB Top 5

Composite of the five decomposed CCM images from the MIPLIB Top 5.

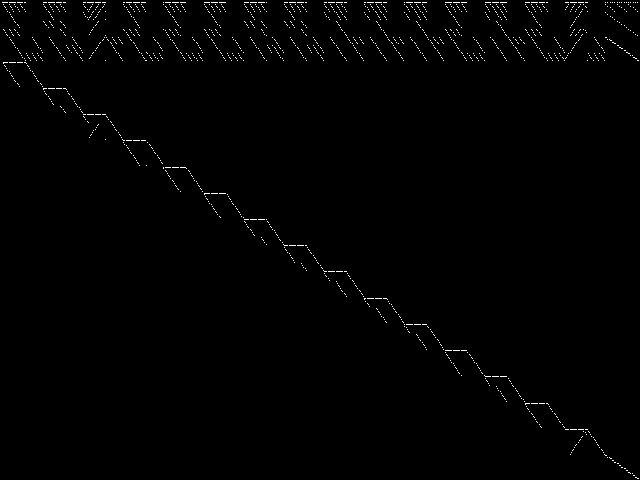

Model Group Composite Image

Composite of the decomposed CCM images for every instance in the same model group as this query.

MIC Top 5 Instances

These are the 5 decomposed CCM images that are most similar to the decomposed CCM image for the query instance, according to the ISS metric.

Decomposed

These decomposed images were created by GCG.

| Name | bienst2 [MIPLIB] | newdano [MIPLIB] | opt1217 [MIPLIB] | supportcase4 [MIPLIB] | pg [MIPLIB] |
|---|---|---|---|---|---|
| Rank / ISS | 1 / 0.131 | 2 / 0.232 | 3 / 0.532 | 4 / 0.541 | 5 / 0.594 |

The image-based structural similarity (ISS) metric measures the Euclidean distance between the image-based feature vectors for the query instance and all other instances. A smaller ISS value indicates greater similarity.
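The ISS definition above (Euclidean distance between feature vectors, smaller meaning more similar) can be illustrated with a minimal sketch. The function names `iss` and `rank_by_iss`, the instance names, and the three-dimensional vectors are all made up for illustration. The page does not describe how the real image-based feature vectors are extracted, or how many dimensions they have.

```python
import math


def iss(query, other):
    """Euclidean distance between two equal-length feature vectors."""
    if len(query) != len(other):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((q - o) ** 2 for q, o in zip(query, other)))


def rank_by_iss(query, candidates):
    """Return (rank, name, distance) tuples, smallest distance first."""
    scored = sorted((iss(query, vec), name) for name, vec in candidates.items())
    return [(rank, name, dist) for rank, (dist, name) in enumerate(scored, start=1)]


# Hypothetical feature vectors; not taken from the MIC.
query = [0.0, 0.0, 0.0]
candidates = {
    "instance_a": [3.0, 4.0, 0.0],
    "instance_b": [1.0, 0.0, 0.0],
    "instance_c": [0.0, 2.0, 0.0],
}

ranking = rank_by_iss(query, candidates)
for rank, name, dist in ranking:
    # Same "rank / ISS" layout as the rows on this page.
    print(f"{rank} / {dist:.3f}  {name}")
```

Sorting by distance and numbering from 1 yields the "Rank / ISS" pairs. An instance far down the sorted list gets a large rank even if it is close to the query under some other similarity measure.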

Raw

These images represent the CCM images in their raw forms (before any decomposition was applied) for the MIC top 5.

MIPLIB Top 5 Instances

These are the 5 instances that are most closely related to the query instance, according to the instance statistic-based similarity measure employed by MIPLIB 2017.

Decomposed

These decomposed images were created by GCG.

| Name | bienst2 [MIPLIB] | newdano [MIPLIB] | danoint [MIPLIB] | rout [MIPLIB] | ns1430538 [MIPLIB] |
|---|---|---|---|---|---|
| Rank / ISS | 1 / 0.131 | 2 / 0.232 | 59 / 1.007 | 79 / 1.064 | 227 / 1.303 |

The image-based structural similarity (ISS) metric measures the Euclidean distance between the image-based feature vectors for the query instance and all other instances. A smaller ISS value indicates greater similarity.

Raw

These images represent the CCM images in their raw forms (before any decomposition was applied) for the MIPLIB top 5.

Instance Summary

The table below contains summary information for bienst1, the five most similar instances to bienst1 according to the MIC, and the five most similar instances to bienst1 according to MIPLIB 2017.

| | INSTANCE | SUBMITTER | DESCRIPTION | ISS | RANK |
|---|---|---|---|---|---|
| Parent Instance | bienst1 [MIPLIB] | MIPLIB submission pool | Imported from the MIPLIB2010 submissions. | 0.000000 | - |
| MIC Top 5 | bienst2 [MIPLIB] | H. Mittelmann | Relaxed version of problem bienst | 0.130710 | 1 |
| | newdano [MIPLIB] | Daniel Bienstock | Telecommunications applications | 0.231517 | 2 |
| | opt1217 [MIPLIB] | MIPLIB submission pool | Imported from the MIPLIB2010 submissions. | 0.532184 | 3 |
| | supportcase4 [MIPLIB] | Michael Winkler | MIP instances collected from Gurobi forum with unknown application | 0.540729 | 4 |
| | pg [MIPLIB] | M. Dawande | Multiproduct partial shipment model | 0.594347 | 5 |
| MIPLIB Top 5 | bienst2 [MIPLIB] | H. Mittelmann | Relaxed version of problem bienst | 0.130710 | 1 |
| | newdano [MIPLIB] | Daniel Bienstock | Telecommunications applications | 0.231517 | 2 |
| | danoint [MIPLIB] | Daniel Bienstock | Telecommunications applications | 1.007354 | 59 |
| | rout [MIPLIB] | MIPLIB submission pool | Imported from the MIPLIB2010 submissions. | 1.063926 | 79 |
| | ns1430538 [MIPLIB] | NEOS Server Submission | Instance coming from the NEOS Server with unknown application. | 1.302722 | 227 |
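To see how far the two similarity measures agree for bienst1, the two top-5 lists from the summary can be compared directly. This is only a small illustrative sketch. The instance names and MIC ranks are copied from the table, while the variable names are arbitrary.

```python
# Top-5 lists for bienst1, copied from the summary table.
mic_top5 = ["bienst2", "newdano", "opt1217", "supportcase4", "pg"]
miplib_top5 = ["bienst2", "newdano", "danoint", "rout", "ns1430538"]

# MIC (ISS) rank of each MIPLIB top-5 instance, as listed in the table.
mic_rank_of_miplib_top5 = {
    "bienst2": 1,
    "newdano": 2,
    "danoint": 59,
    "rout": 79,
    "ns1430538": 227,
}

miplib_set = set(miplib_top5)
mic_set = set(mic_top5)

shared = [name for name in mic_top5 if name in miplib_set]
only_mic = [name for name in mic_top5 if name not in miplib_set]
only_miplib = [name for name in miplib_top5 if name not in mic_set]

print("in both lists:", shared)
print("MIC only:", only_mic)
print("MIPLIB only:", only_miplib)
for name in only_miplib:
    print(f"{name} is ranked {mic_rank_of_miplib_top5[name]} by ISS")
```

The two measures agree on the two closest instances (bienst2 and newdano) and diverge after that. The remaining MIPLIB picks fall at ISS ranks 59, 79, and 227.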

bienst1: Instance-to-Model Comparison Results

| Model Group Assignment from MIPLIB: | no model group assignment |

| Assigned Model Group Rank/ISS in the MIC: | N.A. / N.A. |

MIC Top 5 Model Groups

These are the 5 model group composite (MGC) images that are most similar to the decomposed CCM image for the query instance, according to the ISS metric.

These are model group composite (MGC) images for the MIC top 5 model groups.

| Name | pizza | mc | neos-pseudoapplication-74 | mario | neos-pseudoapplication-97 |
|---|---|---|---|---|---|
| Rank / ISS | 1 / 1.225 | 2 / 1.252 | 3 / 1.330 | 4 / 1.372 | 5 / 1.378 |

The image-based structural similarity (ISS) metric measures the Euclidean distance between the image-based feature vectors for the query instance and all model groups. A smaller ISS value indicates greater similarity.

Model Group Summary

The table below contains summary information for the five most similar model groups to bienst1 according to the MIC.

| | MODEL GROUP | SUBMITTER | DESCRIPTION | ISS | RANK |
|---|---|---|---|---|---|
| MIC Top 5 | pizza | Gleb Belov | These are the models from MiniZinc Challenges 2012-2016 (see www.minizinc.org), compiled for MIP WITH INDICATOR CONSTRAINTS using the develop branch of MiniZinc and CPLEX 12.7.1 on 30 April 2017. Thus, these models can only be handled by solvers accepting indicator constraints. For models compiled with big-M/domain decomposition only, see my previous submission to MIPLIB. To recompile, create a directory MODELS, a list lst12_16.txt of the models with full paths to mzn/dzn files of each model per line, and say $> ~/install/libmzn/tests/benchmarking/mzn-test.py -l ../lst12_16.txt -slvPrf MZN-CPLEX -debug 1 -addOption "-timeout 3 -D fIndConstr=true -D fMIPdomains=false" -useJoinedName "-writeModel MODELS_IND/%s.mps" Alternatively, you can compile individual model as follows: $> mzn-cplex -v -s -G linear -output-time ../challenge_2012_2016/mznc2016_probs/zephyrus/zephyrus.mzn ../challenge_2012_2016/mznc2016_p/zephyrus/14__8__6__3.dzn -a -timeout 3 -D fIndConstr=true -D fMIPdomains=false -writeModel MODELS_IND/challenge_2012_2016mznc2016_probszephyruszephyrusmzn-challenge_2012_2016mznc2016_probszephyrus14__8__6__3dzn.mps | 1.224657 | 1 |
| | mc | F. Ortega, L. Wolsey | Fixed cost network flow problems | 1.251726 | 2 |
| | neos-pseudoapplication-74 | Jeff Linderoth | (None provided) | 1.330012 | 3 |
| | mario | Gleb Belov | These are the models from MiniZinc Challenges 2012-2016 (see www.minizinc.org), compiled for MIP WITH INDICATOR CONSTRAINTS using the develop branch of MiniZinc and CPLEX 12.7.1 on 30 April 2017. Thus, these models can only be handled by solvers accepting indicator constraints. For models compiled with big-M/domain decomposition only, see my previous submission to MIPLIB. To recompile, create a directory MODELS, a list lst12_16.txt of the models with full paths to mzn/dzn files of each model per line, and say $> ~/install/libmzn/tests/benchmarking/mzn-test.py -l ../lst12_16.txt -slvPrf MZN-CPLEX -debug 1 -addOption "-timeout 3 -D fIndConstr=true -D fMIPdomains=false" -useJoinedName "-writeModel MODELS_IND/%s.mps" Alternatively, you can compile individual model as follows: $> mzn-cplex -v -s -G linear -output-time ../challenge_2012_2016/mznc2016_probs/zephyrus/zephyrus.mzn ../challenge_2012_2016/mznc2016_p/zephyrus/14__8__6__3.dzn -a -timeout 3 -D fIndConstr=true -D fMIPdomains=false -writeModel MODELS_IND/challenge_2012_2016mznc2016_probszephyruszephyrusmzn-challenge_2012_2016mznc2016_probszephyrus14__8__6__3dzn.mps | 1.372282 | 4 |
| | neos-pseudoapplication-97 | Jeff Linderoth | (None provided) | 1.378048 | 5 |